Created a neural network that predicts the onset of diabetes in Pima Indians with about 95% accuracy, measured on the same data the network was trained on. Pretty cool!

#http://machinelearningmastery.com/tutorial-first-neural-network-python-keras/

#https://archive.ics.uci.edu/ml/machine-learning-databases/pima-indians-diabetes/

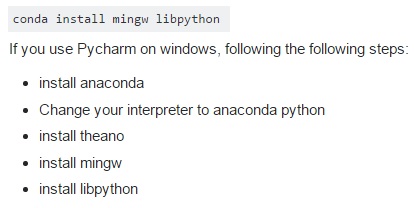

#Using Theano as the Keras backend. Needed to navigate to c:/users/Alex Ko/.keras/keras.json and change the backend from tensorflow to theano

#Create first network with Keras

import keras

from keras.models import Sequential

from keras.layers import Dense

import numpy

import pandas as pd

import sklearn

from sklearn.preprocessing import StandardScaler

# fix random seed for reproducibility

seed = 7

numpy.random.seed(seed)

# load pima indians dataset

dataset = numpy.loadtxt('pima-indians-diabetes.csv', delimiter=",")

#dataset = pd.read_csv('pima-indians-diabetes.csv')

data = pd.DataFrame(dataset) # data is a pandas DataFrame; dataset is a NumPy array

print(data.head())

# split into input (X, i.e. the independent variables) and output (Y, i.e. the dependent variable)

X = dataset[:,0:8] # columns 0-7 are the independent variables (features); the slice end, 8, is not included

Y = dataset[:,8] # column 8 is the dependent variable (the diabetes label)
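The slicing above can be checked on a small array. This is a minimal sketch using a made-up 3-row array (`toy` is a hypothetical stand-in, not the Pima data) to show that the stop index of a slice is excluded and that selecting a single column returns a 1-D array.

```python
import numpy

# Hypothetical 3-row stand-in: 8 feature columns followed by 1 label column.
toy = numpy.arange(27, dtype=float).reshape(3, 9)

features = toy[:, 0:8]  # columns 0..7; the stop index 8 is excluded
labels = toy[:, 8]      # column 8 only, returned as a 1-D array

print(features.shape)
print(labels.shape)
print(labels)
```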

# http://stackoverflow.com/questions/39525358/neural-network-accuracy-optimization

scaler = StandardScaler()

X = scaler.fit_transform(X)
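For intuition, standardization can be written out by hand. The sketch below is not the scikit-learn implementation; `standardize` and `toy` are hypothetical names, and it assumes the usual definition: subtract each column's mean and divide by its population standard deviation.

```python
import numpy

def standardize(features):
    """Scale each column to zero mean and unit variance (population std, ddof=0)."""
    mean = features.mean(axis=0)
    std = features.std(axis=0)
    std[std == 0] = 1.0  # leave constant columns unscaled instead of dividing by zero
    return (features - mean) / std

# Hypothetical toy data: two columns on very different scales.
toy = numpy.array([[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]])
scaled = standardize(toy)

print(scaled.mean(axis=0))  # each column mean is approximately 0
print(scaled.std(axis=0))   # each column std is approximately 1
```

After scaling, both columns carry the same values even though the raw columns differ by a factor of ten, which is why scaling helps when features use different units.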

# create model

model = Sequential()

# model.add(Dense(1000, input_dim=8, init='uniform', activation='relu')) # 1000 neurons

# model.add(Dense(100, init='uniform', activation='tanh')) # 100 neurons with tanh activation function

model.add(Dense(500, input_dim=8, init='uniform', activation='relu')) # 500 neurons; as the first layer it needs input_dim=8 (one input per feature)

# 95.41% accuracy with 500 neurons

# 86.99% accuracy with 100 neurons

# 85.2% accuracy with 50 neurons

# 81.38% accuracy with 10 neurons

model.add(Dense(1, init='uniform', activation='sigmoid')) # 1 output neuron

# Compile model

model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])
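To see what the `binary_crossentropy` loss measures, it can be computed by hand. This is a rough NumPy sketch of the standard formula, not Keras's own code; the function name and the `eps` clipping value are assumptions made for illustration.

```python
import numpy

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean of -(y*log(p) + (1-y)*log(1-p)) over all samples."""
    y_true = numpy.asarray(y_true, dtype=float)
    # Clip probabilities away from 0 and 1 so log() never receives 0.
    y_prob = numpy.clip(numpy.asarray(y_prob, dtype=float), eps, 1.0 - eps)
    losses = -(y_true * numpy.log(y_prob) + (1.0 - y_true) * numpy.log(1.0 - y_prob))
    return float(numpy.mean(losses))

unsure = binary_crossentropy([1, 0], [0.5, 0.5])     # equals log(2)
confident = binary_crossentropy([1, 0], [0.9, 0.1])  # confident and correct
wrong = binary_crossentropy([1, 0], [0.1, 0.9])      # confident and wrong

print(unsure, confident, wrong)
```

The loss is smallest when the predicted probability is close to the true label and grows quickly for confident mistakes.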

# Fit the model

model.fit(X, Y, nb_epoch=150, batch_size=10, verbose=2) # 150 epochs, batch size 10, verbose=2 prints one line per epoch (Keras 2 renamed nb_epoch to epochs)

# evaluate the model (on the same X and Y it was trained on, so this is training accuracy)

scores = model.evaluate(X, Y)

print("%s: %.2f%%" % (model.metrics_names[1], scores[1]*100))
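Because the score above is computed on the training rows, it says little about new patients. One common remedy is to hold out a test set. The sketch below only builds the index split with NumPy; `split_indices`, the 20% test fraction, and the row count of 768 are assumptions for illustration (use `dataset.shape[0]` for the real count).

```python
import numpy

def split_indices(n_rows, test_fraction=0.2, seed=7):
    """Return (train_idx, test_idx): a shuffled, non-overlapping split of row indices."""
    rng = numpy.random.RandomState(seed)
    shuffled = rng.permutation(n_rows)
    n_test = int(round(n_rows * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

train_idx, test_idx = split_indices(768)
print(len(train_idx), len(test_idx))

# Hypothetical usage with the arrays from this script (not run here):
# model.fit(X[train_idx], Y[train_idx], nb_epoch=150, batch_size=10, verbose=2)
# scores = model.evaluate(X[test_idx], Y[test_idx])
```

For a fair estimate, the scaler would also be fitted on the training rows only and then applied to the test rows.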

# calculate predictions

predictions = model.predict(X) # predicting Y only using X

print(predictions)

# Round predictions

rounded = [int(numpy.round(x[0])) for x in predictions] # predictions has shape (n, 1), so take the single value in each row

print(rounded)
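The rounding step can also be done without a Python loop. This is a small sketch on made-up probabilities (`probabilities` is a hypothetical stand-in for the output of `model.predict(X)`), thresholding at 0.5 and flattening the `(n, 1)` array in one expression.

```python
import numpy

# Hypothetical stand-in for model.predict(X): one probability per row, shape (n, 1).
probabilities = numpy.array([[0.12], [0.50], [0.51], [0.97]])

# Flatten to 1-D, compare against the 0.5 threshold, convert booleans to 0/1.
labels = (probabilities.ravel() > 0.5).astype(int)

print(labels.tolist())  # [0, 0, 1, 1]
```

A probability of exactly 0.5 maps to class 0 here, which matches `numpy.round`, since it rounds halves to the nearest even number.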

print("Rounded type: ", type(rounded)) # rounded is a 'list' class

print("Shape of rounded: ", len(rounded))

print("Dataset type: ", type(dataset)) # dataset is a numpy.ndarray

print("Shape of dataset: ", dataset.shape)

# Turn rounded from a 'list' class into a numpy array

newRounded = numpy.array(rounded)

print("Rounded type: ", type(newRounded))

# Add the rounded numpy array (called newRounded) to the end of the dataset numpy array

newDataset = numpy.column_stack((dataset, newRounded))

qwerty=pd.DataFrame(newDataset)

qwerty.to_excel('pimaPredictions.xlsx',sheet_name='sheet1',index=False)

# Create a confusion matrix from the actual values and the predicted classes (the rounded probabilities)

df_confusion = pd.crosstab(Y, newRounded, rownames=['Actual'], colnames=['Predicted'], margins=True)

df_conf_norm = df_confusion.div(df_confusion['All'], axis=0) # divide each row by its total (the 'All' margin) so rows show per-class rates

print(df_confusion)

print(df_conf_norm)
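To make the confusion matrix and its row-normalized form concrete, here is a minimal NumPy sketch on made-up labels. `confusion_counts`, `actual`, and `predicted` are hypothetical names and data, not output from the model above. Rows are actual classes and columns are predicted classes.

```python
import numpy

def confusion_counts(actual, predicted, n_classes=2):
    """counts[a, p] = number of samples with actual class a and predicted class p."""
    counts = numpy.zeros((n_classes, n_classes), dtype=int)
    for a, p in zip(actual, predicted):
        counts[int(a), int(p)] += 1
    return counts

# Hypothetical labels for eight samples.
actual = numpy.array([0, 0, 0, 1, 1, 1, 1, 0])
predicted = numpy.array([0, 0, 1, 1, 1, 0, 1, 0])

counts = confusion_counts(actual, predicted)
# Divide each row by its own total so every row sums to 1.
row_rates = counts / counts.sum(axis=1, keepdims=True)

print(counts)
print(row_rates)
```

In the row-normalized matrix, the diagonal entries are the fraction of each actual class that was predicted correctly.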

End.